#BigQuery Data Engineering Agent

Explore tagged Tumblr posts

Text

BigQuery Data Engineering Agent Set Ups Your Data Pipelines

BigQuery has powered analytics and business insights for data teams for years. However, developing, maintaining, and debugging data pipelines that provide such insights takes time and expertise. Google Cloud's shared vision advances BigQuery data engineering agent use to speed up data engineering.

Not just useful tools, these agents are agentic solutions that work as informed partners in your data processes. They collaborate with your team, automate tough tasks, and continually learn and adapt so you can focus on data value.

Value of data engineering agents

The data landscape changes. Organisations produce more data from more sources and formats than before. Companies must move quicker and use data to compete.

This is problematic. Common data engineering methods include:

Manual coding: Writing and updating lengthy SQL queries when establishing and upgrading pipelines can be tedious and error-prone.

Schema struggles: Mapping data from various sources to the right format is difficult, especially as schemas change.

Hard troubleshooting: Sorting through logs and code to diagnose and fix pipeline issues takes time, delaying critical insights.

Pipeline construction and maintenance need specialised skills, which limits participation and generates bottlenecks.

The BigQuery data engineering agent addresses these difficulties to speed up data pipeline construction and management.

Introduce your AI-powered data engineers

Imagine having a team of expert data engineers to design, manage, and debug pipelines 24/7 so your data team can focus on higher-value projects. Data engineering agent is experimental.

The BigQuery data engineering agent will change the game:

Automated pipeline construction and alteration

Do data intake, convert, and validate need a new pipeline? Just say what you need in normal English, and the agent will handle it. For instance:

Create a pipeline to extract data from the ‘customer_orders’ bucket, standardise date formats, eliminate duplicate entries by order ID, and dump it into a BigQuery table named ‘clean_orders’.”

Using data engineering best practices and your particular environment and context, the agent creates the pipeline, generates SQL code, and writes basic unit tests. Intelligent, context-aware automation trumps basic automation.

Should an outdated pipeline be upgraded? Tell the representative what you want changed. It analysed the code, suggested improvements, and suggested consequences on downstream activities. You review and approve modifications while the agent performs the tough lifting.

Proactive optimisation and troubleshooting

Problems with pipeline? The agent monitors pipelines, detects data drift and schema issues, and offers fixes. Like having a dedicated specialist defend your data infrastructure 24/7.

Bulk draft pipelines

Data engineers can expand pipeline production or modification by using previously taught context and information. The command line and API for automation at scale allow companies to quickly expand pipelines for different departments or use cases and customise them. After receiving command line instructions, the agent below builds bulk pipelines using domain-specific agent instructions.

How it works: Hidden intelligence

The agents employ many basic concepts to manage the complexity most businesses face:

Hierarchical context: Agents employ several knowledge sources:

Standard SQL, data formats, etc. are understood by everybody.

Understanding vertical-specific industry conventions (e.g., healthcare or banking data formats)

Knowledge of your department or firm's business environment, data architecture, naming conventions, and security laws

Information about data pipeline source and target schemas, transformations, and dependencies

Continuous learning: Agents learn from user interactions and workflows rather than following orders. As agents work in your environment, their skills grow.

Collective, multi-agent environment

BigQuery data engineering agents work in a multi-agent environment to achieve complex goals by sharing tasks and cooperating:

Ingestion agents efficiently process data from several sources.

A transformation agent builds reliable, effective data pipelines.

Validation agents ensure data quality and consistency.

Troubleshooters aggressively find and repair issues.

Dataplex metadata powers a data quality agent that monitors data and alerts of abnormalities.

Google Cloud is focussing on intake, transformation, and debugging for now, but it plans to expand these early capabilities to other important data engineering tasks.

Workflow your way

Whether you prefer the BigQuery Studio UI, your chosen IDE for code authoring, or the command line for pipeline management, it wants to meet you there. The data engineering agent is now only available in BigQuery Studio's pipeline editor and API/CLI. It wants to make it available elsewhere.

Your data engineer and workers

Artificial Intelligent-powered bots are only beginning to change how data professionals interact with and value their data. The BigQuery data engineering agent allows data scientists, engineers, and analysts to do more, faster, and more reliably. These agents are intelligent coworkers that automate tedious tasks, optimise processes, and boost productivity. Google Cloud starts with shifting data from Bronze to Silver in a data lake and grows from there.

With Dataplex, BigQuery ML, and Vertex AI, the BigQuery data engineering agent can transform how organisations handle, analyse, and value their data. By empowering data workers of all skill levels, promoting collaboration, and automating challenging tasks, these agents are ushering in a new era of data-driven creativity.

Ready to start?

Google Cloud is only starting to build an intelligent, self-sufficient data platform. It regularly trains data engineering bots to be more effective and observant collaborators for all your data needs.

The BigQuery data engineering agent will soon be available. It looks forward to helping you maximise your data and integrating it into your data engineering processes.

#technology#technews#govindhtech#news#technologynews#Data engineering agent#multi-agent environment#data engineering team#BigQuery Data Engineering Agent#BigQuery#Data Pipelines

0 notes

Text

Soham Mazumdar, Co-Founder & CEO of WisdomAI – Interview Series

New Post has been published on https://thedigitalinsider.com/soham-mazumdar-co-founder-ceo-of-wisdomai-interview-series/

Soham Mazumdar, Co-Founder & CEO of WisdomAI – Interview Series

Soham Mazumdar is the Co-Founder and CEO of WisdomAI, a company at the forefront of AI-driven solutions. Prior to founding WisdomAI in 2023, he was Co-Founder and Chief Architect at Rubrik, where he played a key role in scaling the company over a 9-year period. Soham previously held engineering leadership roles at Facebook and Google, where he contributed to core search infrastructure and was recognized with the Google Founder’s Award. He also co-founded Tagtile, a mobile loyalty platform acquired by Facebook. With two decades of experience in software architecture and AI innovation, Soham is a seasoned entrepreneur and technologist based in the San Francisco Bay Area.

WisdomAI is an AI-native business intelligence platform that helps enterprises access real-time, accurate insights by integrating structured and unstructured data through its proprietary “Knowledge Fabric.” The platform powers specialized AI agents that curate data context, answer business questions in natural language, and proactively surface trends or risks—without generating hallucinated content. Unlike traditional BI tools, WisdomAI uses generative AI strictly for query generation, ensuring high accuracy and reliability. It integrates with existing data ecosystems and supports enterprise-grade security, with early adoption by major firms like Cisco and ConocoPhillips.

You co-founded Rubrik and helped scale it into a major enterprise success. What inspired you to leave in 2023 and build WisdomAI—and was there a particular moment that clarified this new direction?

The enterprise data inefficiency problem was staring me right in the face. During my time at Rubrik, I witnessed firsthand how Fortune 500 companies were drowning in data but starving for insights. Even with all the infrastructure we built, less than 20% of enterprise users actually had the right access and know-how to use data effectively in their daily work. It was a massive, systemic problem that no one was really solving.

I’m also a builder by nature – you can see it in my path from Google to Tagtile to Rubrik and now WisdomAI. I get energized by taking on fundamental challenges and building solutions from the ground up. After helping scale Rubrik to enterprise success, I felt that entrepreneurial pull again to tackle something equally ambitious.

Last but not least, the AI opportunity was impossible to ignore. By 2023, it became clear that AI could finally bridge that gap between data availability and data usability. The timing felt perfect to build something that could democratize data insights for every enterprise user, not just the technical few.

The moment of clarity came when I realized we could combine everything I’d learned about enterprise data infrastructure at Rubrik with the transformative potential of AI to solve this fundamental inefficiency problem.

WisdomAI introduces a “Knowledge Fabric” and a suite of AI agents. Can you break down how this system works together to move beyond traditional BI dashboards?

We’ve built an agentic data insights platform that works with data where it is – structured, unstructured, and even “dirty” data. Rather than asking analytics teams to run reports, business managers can directly ask questions and drill into details. Our platform can be trained on any data warehousing system by analyzing query logs.

We’re compatible with major cloud data services like Snowflake, Microsoft Fabric, Google’s BigQuery, Amazon’s Redshift, Databricks, and Postgres and also just document formats like excel, PDF, powerpoint etc.

Unlike conventional tools designed primarily for analysts, our conversational interface empowers business users to get answers directly, while our multi-agent architecture enables complex queries across diverse data systems.

You’ve emphasized that WisdomAI avoids hallucinations by separating GenAI from answer generation. Can you explain how your system uses GenAI differently—and why that matters for enterprise trust?

Our AI-Ready Context Model trains on the organization’s data to create a universal context understanding that answers questions with high semantic accuracy while maintaining data privacy and governance. Furthermore, we use generative AI to formulate well-scoped queries that allow us to extract data from the different systems, as opposed to feeding raw data into the LLMs. This is crucial for addressing hallucination and safety concerns with LLMs.

You coined the term “Agentic Data Insights Platform.” How is agentic intelligence different from traditional analytics tools or even standard LLM-based assistants?

Traditional BI stacks slow decision-making because every question has to fight its way through disconnected data silos and a relay team of specialists. When a chief revenue officer needs to know how to close the quarter, the answer typically passes through half a dozen hands—analysts wrangling CRM extracts, data engineers stitching files together, and dashboard builders refreshing reports—turning a simple query into a multi-day project.

Our platform breaks down those silos and puts the full depth of data one keystroke away, so the CRO can drill from headline metrics all the way to row-level detail in seconds.

No waiting in the analyst queue, no predefined dashboards that can’t keep up with new questions—just true self-service insights delivered at the speed the business moves.

How do you ensure WisdomAI adapts to the unique data vocabulary and structure of each enterprise? What role does human input play in refining the Knowledge Fabric?

Working with data where and how it is – that’s essentially the holy grail for enterprise business intelligence. Traditional systems aren’t built to handle unstructured data or “dirty” data with typos and errors. When information exists across varied sources – databases, documents, telemetry data – organizations struggle to integrate this information cohesively.

Without capabilities to handle these diverse data types, valuable context remains isolated in separate systems. Our platform can be trained on any data warehousing system by analyzing query logs, allowing it to adapt to each organization’s unique data vocabulary and structure.

You’ve described WisdomAI’s development process as ‘vibe coding’—building product experiences directly in code first, then iterating through real-world use. What advantages has this approach given you compared to traditional product design?

“Vibe coding” is a significant shift in how software is built where developers leverage the power of AI tools to generate code simply by describing the desired functionality in natural language. It’s like an intelligent assistant that does what you want the software to do, and it writes the code for you. This dramatically reduces the manual effort and time traditionally required for coding.

For years, the creation of digital products has largely followed a familiar script: meticulously plan the product and UX design, then execute the development, and iterate based on feedback. The logic was clear because investing in design upfront minimizes costly rework during the more expensive and time-consuming development phase. But what happens when the cost and time to execute that development drastically shrinks? This capability flips the traditional development sequence on its head. Suddenly, developers can start building functional software based on a high-level understanding of the requirements, even before detailed product and UX designs are finalized.

With the speed of AI code generation, the effort involved in creating exhaustive upfront designs can, in certain contexts, become relatively more time-consuming than getting a basic, functional version of the software up and running. The new paradigm in the world of vibe coding becomes: execute (code with AI), then adapt (design and refine).

This approach allows for incredibly early user validation of the core concepts. Imagine getting feedback on the actual functionality of a feature before investing heavily in detailed visual designs. This can lead to more user-centric designs, as the design process is directly informed by how users interact with a tangible product.

At WisdomAI, we actively embrace AI code generation. We’ve found that by embracing rapid initial development, we can quickly test core functionalities and gather invaluable user feedback early in the process, live on the product. This allows our design team to then focus on refining the user experience and visual design based on real-world usage, leading to more effective and user-loved products, faster.

From sales and marketing to manufacturing and customer success, WisdomAI targets a wide spectrum of business use cases. Which verticals have seen the fastest adoption—and what use cases have surprised you in their impact?

We’ve seen transformative results with multiple customers. For F500 oil and gas company, ConocoPhillips, drilling engineers and operators now use our platform to query complex well data directly in natural language. Before WisdomAI, these engineers needed technical help for even basic operational questions about well status or job performance. Now they can instantly access this information while simultaneously comparing against best practices in their drilling manuals—all through the same conversational interface. They evaluated numerous AI vendors in a six-month process, and our solution delivered a 50% accuracy improvement over the closest competitor.

At a hyper growth Cyber Security company Descope, WisdomAI is used as a virtual data analyst for Sales and Finance. We reduced report creation time from 2-3 days to just 2-3 hours—a 90% decrease. This transformed their weekly sales meetings from data-gathering exercises to strategy sessions focused on actionable insights. As their CRO notes, “Wisdom AI brings data to my fingertips. It really democratizes the data, bringing me the power to go answer questions and move on with my day, rather than define your question, wait for somebody to build that answer, and then get it in 5 days.” This ability to make data-driven decisions with unprecedented speed has been particularly crucial for a fast-growing company in the competitive identity management market.

A practical example: A chief revenue officer asks, “How am I going to close my quarter?” Our platform immediately offers a list of pending deals to focus on, along with information on what’s delaying each one – such as specific questions customers are waiting to have answered. This happens with five keystrokes instead of five specialists and days of delay.

Many companies today are overloaded with dashboards, reports, and siloed tools. What are the most common misconceptions enterprises have about business intelligence today?

Organizations sit on troves of information yet struggle to leverage this data for quick decision-making. The challenge isn’t just about having data, but working with it in its natural state – which often includes “dirty” data not cleaned of typos or errors. Companies invest heavily in infrastructure but face bottlenecks with rigid dashboards, poor data hygiene, and siloed information. Most enterprises need specialized teams to run reports, creating significant delays when business leaders need answers quickly. The interface where people consume data remains outdated despite advancements in cloud data engines and data science.

Do you view WisdomAI as augmenting or eventually replacing existing BI tools like Tableau or Looker? How do you fit into the broader enterprise data stack?

We’re compatible with major cloud data services like Snowflake, Microsoft Fabric, Google’s BigQuery, Amazon’s Redshift, Databricks, and Postgres and also just document formats like excel, PDF, powerpoint etc. Our approach transforms the interface where people consume data, which has remained outdated despite advancements in cloud data engines and data science.

Looking ahead, where do you see WisdomAI in five years—and how do you see the concept of “agentic intelligence” evolving across the enterprise landscape?

The future of analytics is moving from specialist-driven reports to self-service intelligence accessible to everyone. BI tools have been around for 20+ years, but adoption hasn’t even reached 20% of company employees. Meanwhile, in just twelve months, 60% of workplace users adopted ChatGPT, many using it for data analysis. This dramatic difference shows the potential for conversational interfaces to increase adoption.

We’re seeing a fundamental shift where all employees can directly interrogate data without technical skills. The future will combine the computational power of AI with natural human interaction, allowing insights to find users proactively rather than requiring them to hunt through dashboards.

Thank you for the great interview, readers who wish to learn more should visit WisdomAI.

#2023#adoption#agent#agents#ai#AI AGENTS#ai code generation#AI innovation#ai tools#Amazon#Analysis#Analytics#approach#architecture#assistants#bi#bi tools#bigquery#bridge#Building#Business#Business Intelligence#CEO#challenge#chatGPT#Cisco#Cloud#cloud data#code#code generation

0 notes

Text

Google’s BigQuery and Looker get agents to simplify analytics tasks

Google has added new agents to its BigQuery data warehouse and Looker business intelligence platform to help data practitioners automate and simplify analytics tasks. The data agents, announced at the company’s Google Cloud Next conference, include a data engineering and data science agent — both of which have been made generally available. The data engineering agent, which is embedded inside…

0 notes

Text

Key Technologies and Tools to Build AI Agents Effectively

The development of AI agents has revolutionized how businesses operate, offering automation, enhanced customer interactions, and data-driven insights. Building an effective AI agent requires a combination of the right technologies and tools. This blog delves into the key technologies and tools essential for creating intelligent and responsive AI agents that can drive business success.

1. Machine Learning Frameworks

Machine learning frameworks provide the foundational tools needed to develop, train, and deploy AI models.

TensorFlow: An open-source framework developed by Google, TensorFlow is widely used for building deep learning models. It offers flexibility and scalability, making it suitable for both research and production environments.

PyTorch: Developed by Facebook, PyTorch is known for its ease of use and dynamic computational graph, which makes it ideal for rapid prototyping and research.

Scikit-learn: A versatile library for machine learning in Python, Scikit-learn is perfect for developing traditional machine learning models, including classification, regression, and clustering.

2. Natural Language Processing (NLP) Tools

NLP tools are crucial for enabling AI agents to understand and interact using human language.

spaCy: An open-source library for advanced NLP in Python, spaCy offers robust support for tokenization, parsing, and named entity recognition, making it ideal for building conversational AI agents.

NLTK (Natural Language Toolkit): A comprehensive library for building NLP applications, NLTK provides tools for text processing, classification, and sentiment analysis.

Transformers by Hugging Face: This library offers state-of-the-art transformer models like BERT, GPT-4, and others, enabling powerful language understanding and generation capabilities for AI agents.

3. AI Development Platforms

AI development platforms streamline the process of building, training, and deploying AI agents by providing integrated tools and services.

Dialogflow: Developed by Google, Dialogflow is a versatile platform for building conversational agents and chatbots. It offers natural language understanding, multi-platform integration, and customizable responses.

Microsoft Bot Framework: This framework provides a comprehensive set of tools for building intelligent bots that can interact across various channels, including websites, messaging apps, and voice assistants.

Rasa: An open-source framework for building contextual AI assistants, Rasa offers flexibility and control over your AI agent’s conversational capabilities, making it suitable for customized and complex applications.

4. Cloud Computing Services

Cloud computing services provide the necessary infrastructure and scalability for developing and deploying AI agents.

AWS (Amazon Web Services): AWS offers a suite of AI and machine learning services, including SageMaker for model building and deployment, and Lex for building conversational interfaces.

Google Cloud Platform (GCP): GCP provides tools like AI Platform for machine learning, Dialogflow for conversational agents, and AutoML for automated model training.

Microsoft Azure: Azure’s AI services include Azure Machine Learning for model development, Azure Bot Service for building intelligent bots, and Cognitive Services for adding pre-built AI capabilities.

5. Data Management and Processing Tools

Effective data management and processing are essential for training accurate and reliable AI agents.

Pandas: A powerful data manipulation library in Python, Pandas is essential for cleaning, transforming, and analyzing data before feeding it into AI models.

Apache Spark: An open-source unified analytics engine, Spark is ideal for large-scale data processing and real-time analytics, enabling efficient handling of big data for AI training.

Data Lakes and Warehouses: Solutions like Amazon S3, Google BigQuery, and Snowflake provide scalable storage and efficient querying capabilities for managing vast amounts of data.

6. Development and Collaboration Tools

Collaboration and efficient development practices are crucial for successful AI agent projects.

GitHub: A platform for version control and collaboration, GitHub allows multiple developers to work together on AI projects, manage code repositories, and track changes.

Jupyter Notebooks: An interactive development environment, Jupyter Notebooks are widely used for exploratory data analysis, model prototyping, and sharing insights.

Docker: Containerization with Docker ensures that your AI agent’s environment is consistent across development, testing, and production, facilitating smoother deployments.

7. Testing and Deployment Tools

Ensuring the reliability and performance of AI agents is critical before deploying them to production.

CI/CD Pipelines: Continuous Integration and Continuous Deployment (CI/CD) tools like Jenkins, GitLab CI, and GitHub Actions automate the testing and deployment process, ensuring that updates are seamlessly integrated.

Monitoring Tools: Tools like Prometheus, Grafana, and AWS CloudWatch provide real-time monitoring and alerting, helping you maintain the performance and reliability of your AI agents post-deployment.

A/B Testing Platforms: Platforms like Optimizely and Google Optimize enable you to conduct A/B tests, allowing you to evaluate different versions of your AI agent and optimize its performance based on user interactions.

Best Practices for Building AI Agents

Start with Clear Objectives: Define the specific tasks and goals your AI agent should achieve to guide the development process.

Ensure Data Quality: Use high-quality, relevant data for training your AI models to enhance accuracy and reliability.

Prioritize User Experience: Design your AI agent with the end-user in mind, ensuring intuitive interactions and valuable responses.

Maintain Security and Privacy: Implement robust security measures to protect user data and comply with relevant regulations.

Iterate and Improve: Continuously monitor your AI agent’s performance and make iterative improvements based on feedback and data insights.

Conclusion

Building an effective AI agent involves a strategic blend of the right technologies, tools, and best practices. By leveraging machine learning frameworks, NLP tools, AI development platforms, cloud services, and robust data management systems, businesses can create intelligent and responsive AI agents that drive operational efficiency and enhance customer experiences. Embracing these technologies not only streamlines the development process but also ensures that your AI agents are scalable, reliable, and aligned with your business objectives.

Whether you’re looking to build a customer service chatbot, a virtual assistant, or an advanced data analysis tool, following a structured approach and utilizing the best available tools will set you on the path to success. Start building your AI agent today and unlock the transformative potential of artificial intelligence for your business.

0 notes

Text

Take Off İstanbul Day # 3: The Big Day for Startups

Take Off İstanbul Day 3 was very excited for startups. 50 startups selected with mentor votes made their presentation on Day 3. Startups in different verticals made their pitch deck to the jury of the leading Turkish and global mentors in different sectors. Besides that, presentations of Google and Invest in Turkey was pretty catchy for participants.

Why Invest In Turkey

Take Off İstanbul #DAY 3 started with Chief Project Director of Invest in Turkey Necmettin Kaymaz’s presentation. He talked about investing in Turkey and the support of the government for entrepreneurs. At the end of the presentation, Necmettin Kaymaz answered participants’ questions.

Google Training Session

Day 3 also included a Google training session in the afternoon. Trainer Devrim Ekmekçi was up first to talk about Measuring and Targeting for Startups, where he gave valuable recommendations on usage of Adwords & analytics, KPI Dashboards, and measurement of campaigns. Next up was trainer Yusuf Sarıgöz, who introduced Qwiklabs, Google’s online Cloud Training portal, before completing a hands-on session on Machine Learning.

Would you like to try Qwiklabs and receive Google Cloud training for free? Google has set up Cloud Study Jam-a-thon where you can learn more about Kubernetes, Machine Learning and BigQuery alongside many other Cloud concepts – enroll for free through this address (in Turkish) https://events.withgoogle.com/cloud-study-jam-a-thon/

Startup Pitches

In the afternoon, startup pitches are started. 49 semi-finalist startups made their pitch deck to the jury of the leading Turkish and global mentors in different sectors.

The semi-finalist startups that made a presentation are:

Auto Train Brain

Auto Train Brain improves the cognitive abilities of dyslexics at home reliably Website: www.autotrainbrain.com

Bren

Flexible Hybrid Nanogenerator running entirely industrial IoT device and wireless sensor without using a battery with battery-less sensor mode

Car4Future

Energy sharing network and transfer hardware developed with blockchain technology for electric vehicles and autonomous cars. Website: car4future.tech

Comparisonator

Comparisonator is a unique tool to compare players’ and teams’ performance data around the world. It assists scouts, sports directors, coaches, agents and players to make better and quicker decisions. Website: https://www.comparisonator.com

ConnectION

Connect-ION attracts attention to being an enterprise that starts out to transform about 1 billion cars in the world without autonomous vehicle technology to an autonomous car. It is aimed to make it available for non-autonomous vehicle owners to have an autonomous vehicle after an easy installation procedure without throwing their current car investments away. Website: www.connect-ion.tech

eMahkeme

“eMahkeme Online Incompatibility Solution Portal” aims to solve users’ judicial problems fast, safely and economically. Website: https://www.emahkeme.com.tr/

Fanaliz

Fanaliz helps companies measure credit risk in an easy, flexible and affordable way by applying algorithms based on data analytics. Website: https://www.fanaliz.com

FilameX

FilameX is a mini filament machine focused on the recycling of waste plastics and filaments to high-quality filaments. Website: https://www.3dfilamex.com/

HEXTECH GREEN

Develops and produces smart agriculture machines aim for indoor agriculture technology. Website: hextechgreen.com

Iltema

We are an R&D company that dealing with technical textiles and brings an innovative approach to heating needs in industrial areas, make them available for OEMs to reach the end-users. Website: www.iltema.com.tr

InMapper

inMapper is an interactive indoor map platform for large buildings such as airports,malls, offices. Website: https://inmapper.com/

Karbonol

Fuel from Cigarette Butt: Karbonol fulfills its production costs, and can solve the stub recycle problem. Website: www.karbonol.com

Meşk

Meşk Tech. is a İstanbul-based music technology company that provides unique software to revolutionize the eastern music education. Any person can learn and practice an instrument or develop vocal skills via using our Meşk App. Website: www.meskteknoloji.com

PACHA

PACHA; protein and collagen chips, %100 Natural and tasty functional food. Website: www.pachacips.com

PDAccess

PDAccess is a cloud security software that helps companies manage their clouds secure, agile and compliant. Website: https://www.pdaccess.com

Pirahas

A magical and revolutionary fully automated software at an unbelievable price for Amazon sellers. Website: www.pirahas.com

Respo Gadgets

Respo Gadgets is an Istanbul based medical device company that develops an innovative, silent, portable and comfortable oral device to be used in mild to moderate level obstructive sleep apnea and snoring treatment. Website: https://www.dormio.com.tr/

SafeTech

Safe Tech is an IDS and an IPS system that protects SCADA/Industrial IoT systems against cyber-attacks and operational threats. Website: www.smartscadasiem.com

Secpoint

To make and new perspective of cyber intelligence Website: https://www.secpoint.com.tr/

SFM Yazılım

SFM Software is a fast and accurate cost estimation software for the product to be manufactured by Small and Medium-sized Enterprises (SMEs) in the machine manufacturing industry and productivity software which increases the company’s productivity by up to 15% at no extra cost to the manufacturer. Website: www.sfmyazilim.com

T Fashion

T-Fashion is a platform that aims to provide customized live analytics and fashion trend insights to companies that operate in the textile industry via analyzing thousands of social media accounts by the use of sophisticated deep learning algorithms Website: https://tfashion.ai

Tetis Bio

TETIS BIOTECH is a biomaterial company producing high quality marine bioactive compound products for industries such as healthcare and cosmetics. Website: https://www.tetisbiotech.com/

Üretken Akademi

Training-oriented startup acceleration program that supports high school and university students to start-up. Website: uretkenakademi.com

User Vision

Uservision is a platform helping brands to acquire agile implicit, explicit and subconscious qualitative insights from their target audience leveraging AI. Website: www.user.vision

ARAIG Global

ARAIG Global is a new international company and partner of IF-Tech Canada. ARAIG Global is a licensed company for production and global sales of the product- Gaming vest. We have the exclusivity to produce, distribute and market the Gaming vest that gives gamers an experience unheard of. Website: araig.com

Augmental

Augmental is an educational technology application targeting middle to high school students where course materials are adapted to each student’s learning abilities using Artificial Intelligence and student engagement tools. Website: www.augmental.education

Beambot

BeamBot offers artificial intelligence software that utilizes the already existing CCTV feed for autonomous control of any facility in order to optimize operation expenses, safety and security. Website: www.beambot.co

BlueVisor

BlueVisor develops AI wealth management platform to lift financial burden so that people can enjoy a better life. BlueVisor mainly focuses on the B2B market with SaaS (Software as a Service) business model or platform. BlueVisor has made tractions already in a short period of company history. Website: bluevisor.kr&entrusta.ai

Cardilink

Displays the status of devices in a dashboard in real time and we analyze your data for the long-term monitoring and quality reports. Alarms and any occurring issues will be reported to you before the product will be used with a patient. Additional benefit for you: Data Analytics of your product for clinical studies. Website: www.cardi-link.com

DRD Biotech

DRD Biotech develops diagnostic blood tests for brain damage such as Stroke, Concussion, and Epilepsy. Website: www.drdbiotech.ru

Edubook

Edubook is an Interactive Learning Community that Provides a Safe Space for Educators and Learners to connect, collaborate, and communicate among each others. Website: WWW.EDUBOOK.ME

FIXAR AERO

FIXAR is an Autonomous commercial drone with unique aerodynamics. Website: WWW.FIXAR-AERO.RU

Hot-wifi

Guest wi-fi networks with marketing options & wi-fi analytics Website: hot-wifi.ru

Inspector Cloud

Inspector Cloud Website: http://www.inspector-cloud.com

InstaCare

InstaCare is transforming the typical healthcare infrastructure of Pakistan into a digital healthcare infrastructure in order to make it more accessible and reliable from the base of the pyramid to save lives over 2,000,000 people every year who die because of the current outdated infrastructure of the country. Website: https://instacare.pk/

Intixel

Intixel is building AI-based products to empower physician decisions as a second eye. Our Team is a finely selected Artificial Intelligence experts, engineers, computer scientists, and medical imaging experts. Website: www.intixel.com

Mazboot

Mazboot is the first Arabic in-app coach for helping diabetic patients self-manage their disease and get a consultation from doctors Website: https://www.mazbootapp.com/

MIFOOD

MiFood is a company that provides automation and robotization services for restaurants. Website: https://mifood.es

Monicont

Monicont is a next-generation utility, transforming lives and unlocking potential through access to energy. Website: https://monicont.com/

Otus Technologies

Otus Technologies develops a novel software tool for aircraft flight loads analysis and dynamic solutions. Website: https://otustech.com.pk

PakVitae

PakVitae provides lifetime affordable filters that can purify water to 99.9999% without any power requirement. Our technology revolutionizes the conventional water treatment processes. Website: https://www.pakvitae.org

Pharma Global

The system of technological solutions based on BigData and AI (e-commerce, e-learning, and marketplace platforms) that reduce costs for market access for pharmaceutical manufacturers. Website: https://pharma.global/

RAAV Techlabs

Data analytics and quality analysis instrumentation company, building devices to check the internal quality of agricultural produce and dairy, by non-invasive and non-destructive method, to give important information like nutritional and adulterants present in the produce. Website: www.raav.in

Torever

Torever allows travelers to plan their trips in a minute for free, and take back control of their journeys with accurate info and navigation on the go! Website: app.torever.com

UIQ Travel

Connects solo travelers with shared interests in-flight and in-destination. Website: https://uiqt.com

Usedesk

OmniAI-powered omnichannel helpdesk platform which supports customers and sales. Website: usedesk.com

UVL Robotics

UVL develops and produces of multi-rotary type UAVs with vertical take-off and landing on hydrogen-air fuel cells. The company was founded by leading experts in the field of aviation and robotics systems design with experience in scientific and industrial enterprises. Website: www.uvl.io

XYLEXA

XYLEXA is an early-stage company developing Artificial Intelligence(AI) based cloud the application which helps the radiologist in early, accurate and cost-effective diagnosis of breast cancer through mammograms. Website: WWW.XYLEXA.COM

1 note

·

View note

Text

Leverage Python and Google Cloud to extract meaningful SEO insights from server log data

For my first post on Search Engine Land, I’ll start by quoting Ian Lurie:

Log file analysis is a lost art. But it can save your SEO butt!

Wise words.

However, getting the data we need out of server log files is usually laborious:

Gigantic log files require robust data ingestion pipelines, a reliable cloud storage infrastructure, and a solid querying system

Meticulous data modeling is also needed in order to convert cryptic, raw logs data into legible bits, suitable for exploratory data analysis and visualization

In the first post of this two-part series, I will show you how to easily scale your analyses to larger datasets, and extract meaningful SEO insights from your server logs.

All of that with just a pinch of Python and a hint of Google Cloud!

Here’s our detailed plan of action:

#1 – I’ll start by giving you a bit of context:

What are log files and why they matter for SEO

How to get hold of them

Why Python alone doesn’t always cut it when it comes to server log analysis

#2 – We’ll then set things up:

Create a Google Cloud Platform account

Create a Google Cloud Storage bucket to store our log files

Use the Command-Line to convert our files to a compliant format for querying

Transfer our files to Google Cloud Storage, manually and programmatically

#3 – Lastly, we’ll get into the nitty-gritty of Pythoning – we will:

Query our log files with Bigquery, inside Colab!

Build a data model that makes our raw logs more legible

Create categorical columns that will enhance our analyses further down the line

Filter and export our results to .csv

In part two of this series (available later this year), we’ll discuss more advanced data modeling techniques in Python to assess:

Bot crawl volume

Crawl budget waste

Duplicate URL crawling

I’ll also show you how to aggregate and join log data to Search Console data, and create interactive visualizations with Plotly Dash!

Excited? Let’s get cracking!

System requirements

We will use Google Colab in this article. No specific requirements or backward compatibility issues here, as Google Colab sits in the cloud.

Downloadable files

The Colab notebook can be accessed here

The log files can be downloaded on Github – 4 sample files of 20 MB each, spanning 4 days (1 day per file)

Be assured that the notebook has been tested with several million rows at lightning speed and without any hurdles!

Preamble: What are log files?

While I don’t want to babble too much about what log files are, why they can be invaluable for SEO, etc. (heck, there are many great articles on the topic already!), here’s a bit of context.

A server log file records every request made to your web server for content.

Every. Single. One.

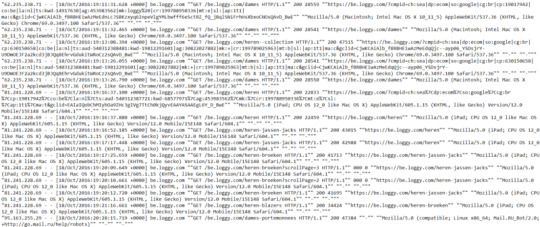

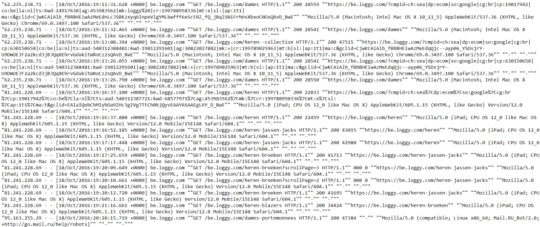

In their rawest forms, logs are indecipherable, e.g. here are a few raw lines from an Apache webserver:

Daunting, isn’t it?

Raw logs must be “cleansed” in order to be analyzed; that’s where data modeling kicks in. But more on that later.

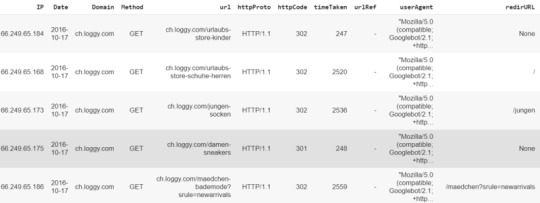

Whereas the structure of a log file mainly depends on the server (Apache, Nginx, IIS etc…), it has evergreen attributes:

Server IP

Date/Time (also called timestamp)

Method (GET or POST)

URI

HTTP status code

User-agent

Additional attributes can usually be included, such as:

Referrer: the URL that ‘linked’ the user to your site

Redirected URL, when a redirect occurs

Size of the file sent (in bytes)

Time taken: the time it takes for a request to be processed and its response to be sent

Why are log files important for SEO?

If you don’t know why they matter, read this. Time spent wisely!

Accessing your log files

If you’re not sure where to start, the best is to ask your (client’s) Web Developer/DevOps if they can grant you access to raw server logs via FTP, ideally without any filtering applied.

Here are the general guidelines to find and manage log data on the three most popular servers:

Apache log files (Linux)

NGINX log files (Linux)

IIS log files (Windows)

We’ll use raw Apache files in this project.

Why Pandas alone is not enough when it comes to log analysis

Pandas (an open-source data manipulation tool built with Python) is pretty ubiquitous in data science.

It’s a must to slice and dice tabular data structures, and the mammal works like a charm when the data fits in memory!

That is, a few gigabytes. But not terabytes.

Parallel computing aside (e.g. Dask, PySpark), a database is usually a better solution for big data tasks that do not fit in memory. With a database, we can work with datasets that consume terabytes of disk space. Everything can be queried (via SQL), accessed, and updated in a breeze!

In this post, we’ll query our raw log data programmatically in Python via Google BigQuery. It’s easy to use, affordable and lightning-fast – even on terabytes of data!

The Python/BigQuery combo also allows you to query files stored on Google Cloud Storage. Sweet!

If Google is a nay-nay for you and you wish to try alternatives, Amazon and Microsoft also offer cloud data warehouses. They integrate well with Python too:

Amazon:

AWS S3

Redshift

Microsoft:

Azure Storage

Azure data warehouse

Azure Synaps

Create a GCP account and set-up Cloud Storage

Both Google Cloud Storage and BigQuery are part of Google Cloud Platform (GCP), Google’s suite of cloud computing services.

GCP is not free, but you can try it for a year with $300 credits, with access to all products. Pretty cool.

Note that once the trial expires, Google Cloud Free Tier will still give you access to most Google Cloud resources, free of charge. With 5 GB of storage per month, it’s usually enough if you want to experiment with small datasets, work on proof of concepts, etc…

Believe me, there are many. Great. Things. To. Try!

You can sign-up for a free trial here.

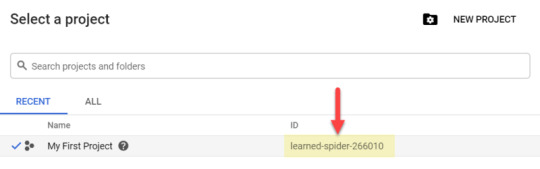

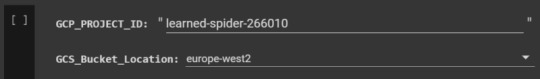

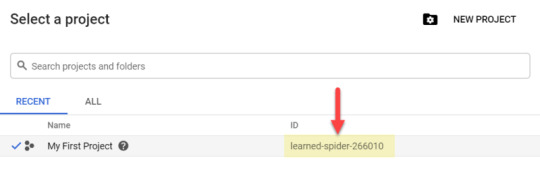

Once you have completed sign-up, a new project will be automatically created with a random, and rather exotic, name – e.g. mine was “learned-spider-266010“!

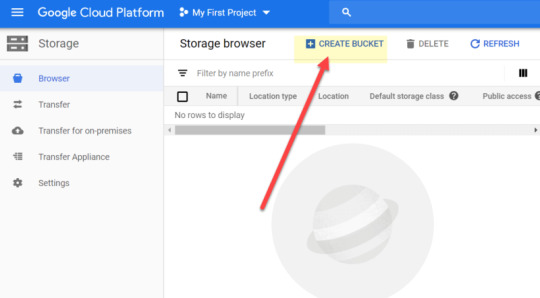

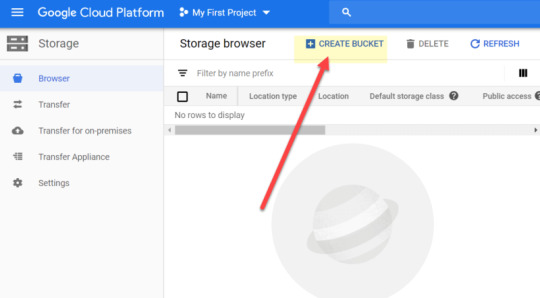

Create our first bucket to store our log files

In Google Cloud Storage, files are stored in “buckets”. They will contain our log files.

To create your first bucket, go to storage > browser > create bucket:

The bucket name has to be unique. I’ve aptly named mine ‘seo_server_logs’!

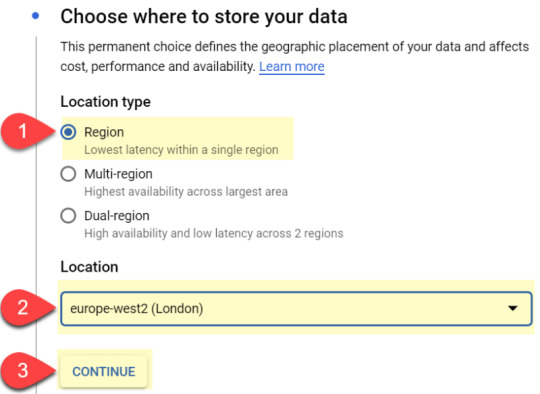

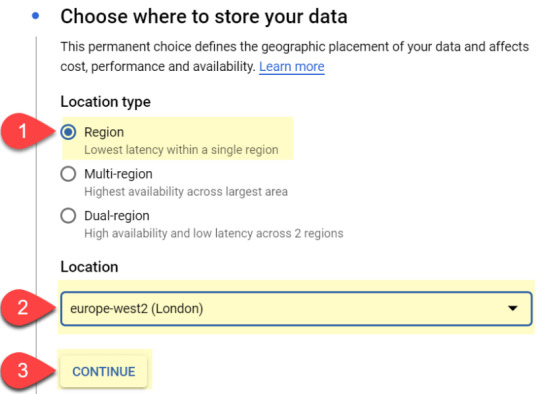

We then need to choose where and how to store our log data:

#1 Location type – ‘Region’ is usually good enough.

#2 Location – As I’m based in the UK, I’ve selected ‘Europe-West2’. Select your nearest location

#3 Click on ‘continue’

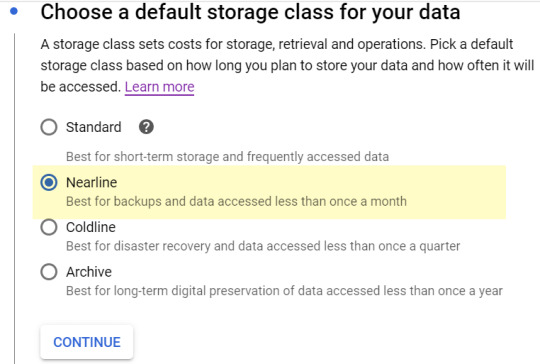

Default storage class: I’ve had good results with ‘nearline‘. It is cheaper than standard, and the data is retrieved quickly enough:

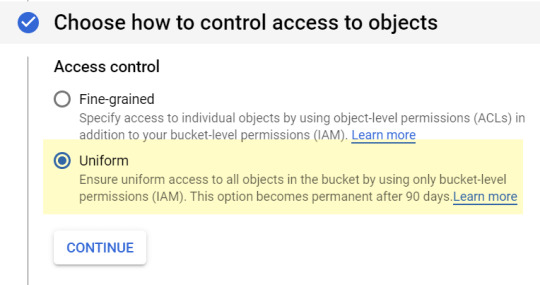

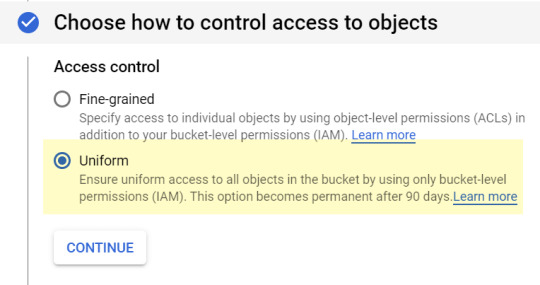

Access to objects: “Uniform” is fine:

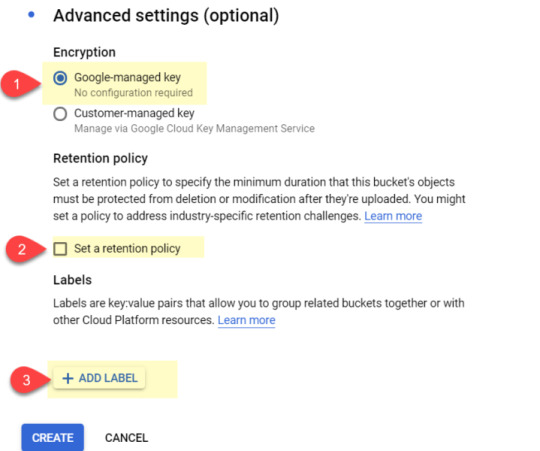

Finally, in the “advanced settings” block, select:

#1 – Google-managed key

#2 – No retention policy

#3 – No need to add a label for now

When you’re done, click “‘create.”

You’ve created your first bucket! Time to upload our log data.

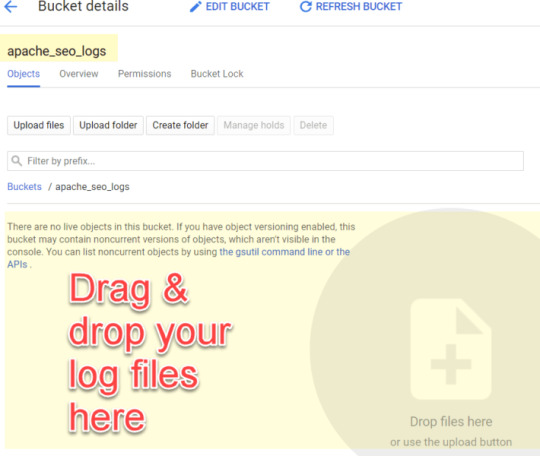

Adding log files to your Cloud Storage bucket

You can upload as many files as you wish, whenever you want to!

The simplest way is to drag and drop your files to Cloud Storage’s Web UI, as shown below:

Yet, if you really wanted to get serious about log analysis, I’d strongly suggest automating the data ingestion process!

Here are a few things you can try:

Cron jobs can be set up between FTP servers and Cloud Storage infrastructures:

Gsutil, if on GCP

SFTP Transfers, if on AWS

FTP managers like Cyberduck also offer automatic transfers to storage systems, too

More data ingestion tips here (AppEngine, JSON API etc.)

A quick note on file formats

The sample files uploaded in Github have already been converted to .csv for you.

Bear in mind that you may have to convert your own log files to a compliant file format for SQL querying. Bigquery accepts .csv or .parquet.

Files can easily be bulk-converted to another format via the command line. You can access the command line as follows on Windows:

Open the Windows Start menu

Type “command” in the search bar

Select “Command Prompt” from the search results

I’ve not tried this on a Mac, but I believe the CLI is located in the Utilities folder

Once opened, navigate to the folder containing the files you want to convert via this command:

CD 'path/to/folder’

Simply replace path/to/folder with your path.

Then, type the command below to convert e.g. .log files to .csv:

for file in *.log; do mv "$file" "$(basename "$file" .*0).csv"; done

Note that you may need to enable Windows Subsystem for Linux to use this Bash command.

Now that our log files are in, and in the right format, it’s time to start Pythoning!

Unleash the Python

Do I still need to present Python?!

According to Stack Overflow, Python is now the fastest-growing major programming language. It’s also getting incredibly popular in the SEO sphere, thanks to Python preachers like Hamlet or JR.

You can run Python on your local computer via Jupyter notebook or an IDE, or even in the cloud via Google Colab. We’ll use Google Colab in this article.

Remember, the notebook is here, and the code snippets are pasted below, along with explanations.

Import libraries + GCP authentication

We’ll start by running the cell below:

It imports the Python libraries we need and redirects you to an authentication screen.

There you’ll have to choose the Google account linked to your GCP project.

Connect to Google Cloud Storage (GCS) and BigQuery

There’s quite a bit of info to add in order to connect our Python notebook to GCS & BigQuery. Besides, filling in that info manually can be tedious!

Fortunately, Google Colab’s forms make it easy to parameterize our code and save time.

The forms in this notebook have been pre-populated for you. No need to do anything, although I do suggest you amend the code to suit your needs.

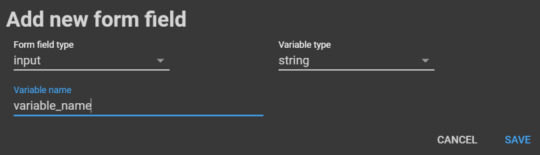

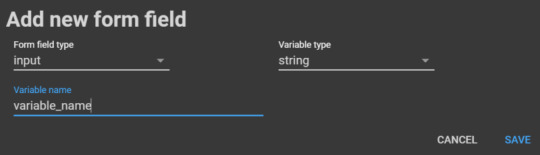

Here’s how to create your own form: Go to Insert > add form field > then fill in the details below:

When you change an element in the form, its corresponding values will magically change in the code!

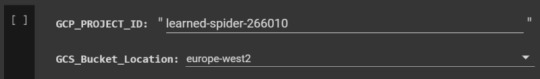

Fill in ‘project ID’ and ‘bucket location’

In our first form, you’ll need to add two variables:

Your GCP PROJECT_ID (mine is ‘learned-spider-266010′)

Your bucket location:

To find it, in GCP go to storage > browser > check location in table

Mine is ‘europe-west2′

Here’s the code snippet for that form:

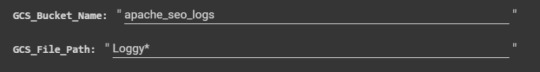

Fill in ‘bucket name’ and ‘file/folder path’:

In the second form, we’ll need to fill in two more variables:

The bucket name:

To find it, in GCP go to: storage > browser > then check its ‘name’ in the table

I’ve aptly called it ‘apache_seo_logs’!

The file path:

You can use a wildcard to query several files – Very nice!

E.g. with the wildcarded path ‘Loggy*’, Bigquery would query these three files at once:

Loggy01.csv

Loggy02.csv

Loggy03.csv

Bigquery also creates a temporary table for that matter (more on that below)

Here’s the code for the form:

Connect Python to Google Cloud Storage and BigQuery

In the third form, you need to give a name to your BigQuery table – I’ve called mine ‘log_sample’. Note that this temporary table won’t be created in your Bigquery account.

Okay, so now things are getting really exciting, as we can start querying our dataset via SQL *without* leaving our notebook – How cool is that?!

As log data is still in its raw form, querying it is somehow limited. However, we can apply basic SQL filtering that will speed up Pandas operations later on.

I have created 2 SQL queries in this form:

“SQL_1st_Filter” to filter any text

“SQL_Useragent_Filter” to select your User-Agent, via a drop-down

Feel free to check the underlying code and tweak these two queries to your needs.

If your SQL trivia is a bit rusty, here’s a good refresher from Kaggle!

Code for that form:

Converting the list output to a Pandas Dataframe

The output generated by BigQuery is a two-dimensional list (also called ‘list of lists’). We’ll need to convert it to a Pandas Dataframe via this code:

Done! We now have a Dataframe that can be wrangled in Pandas!

Data cleansing time, the Pandas way!

Time to make these cryptic logs a bit more presentable by:

Splitting each element

Creating a column for each element

Split IP addresses

Split dates and times

We now need to convert the date column from string to a “Date time” object, via the Pandas to_datetime() method:

Doing so will allow us to perform time-series operations such as:

Slicing specific date ranges

Resampling time series for different time periods (e.g. from day to month)

Computing rolling statistics, such as a rolling average

The Pandas/Numpy combo is really powerful when it comes to time series manipulation, check out all you can do here!

More split operations below:

Split domains

Split methods (Get, Post etc…)

Split URLs

Split HTTP Protocols

Split status codes

Split ‘time taken’

Split referral URLs

Split User Agents

Split redirected URLs (when existing)

Reorder columns

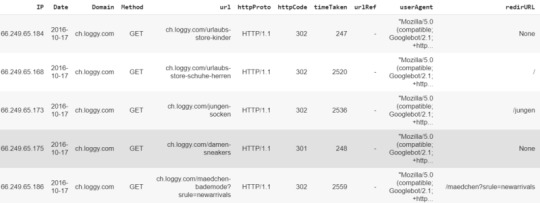

Time to check our masterpiece:

Well done! With just a few lines of code, you converted a set of cryptic logs to a structured Dataframe, ready for exploratory data analysis.

Let’s add a few more extras.

Create categorical columns

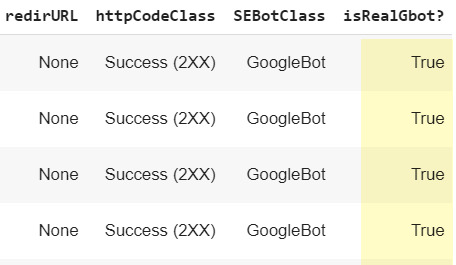

These categorical columns will come handy for data analysis or visualization tasks. We’ll create two, paving the way for your own experiments!

Create an HTTP codes class column

Create a search engine bots category column

As you can see, our new columns httpCodeClass and SEBotClass have been created:

Spotting ‘spoofed’ search engine bots

We still need to tackle one crucial step for SEO: verify that IP addresses are genuinely from Googlebots.

All credit due to the great Tyler Reardon for this bit! Tyler has created searchtools.io, a clever tool that checks IP addresses and returns ‘fake’ Googlebot ones, based on a reverse DNS lookup.

We’ve simply integrated that script into the notebook – code snippet below:

Running the cell above will create a new column called ‘isRealGbot?:

Note that the script is still in its early days, so please consider the following caveats:

You may get errors when checking a huge amount of IP addresses. If so, just bypass the cell

Only Googlebots are checked currently

Tyler and I are working on the script to improve it, so keep an eye on Twitter for future enhancements!

Filter the Dataframe before final export

If you wish to further refine the table before exporting to .csv, here’s your chance to filter out status codes you don’t need and refine timescales.

Some common use cases:

You have 12 months’ worth of log data stored in the cloud, but only want to review the last 2 weeks

You’ve had a recent website migration and want to check all the redirects (301s, 302s, etc.) and their redirect locations

You want to check all 4XX response codes

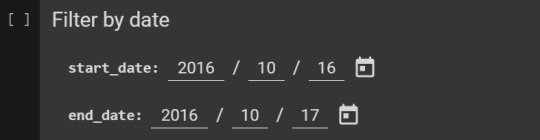

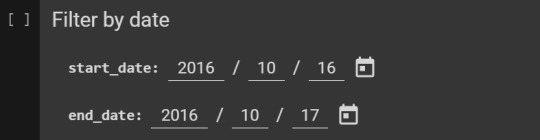

Filter by date

Refine start and end dates via this form:

Filter by status codes

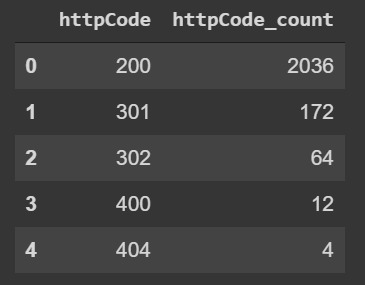

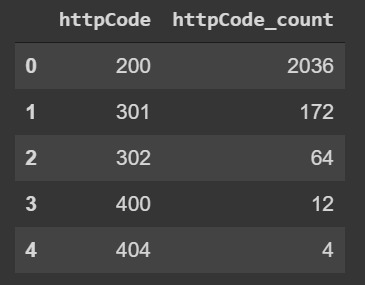

Check status codes distribution before filtering:

Code:

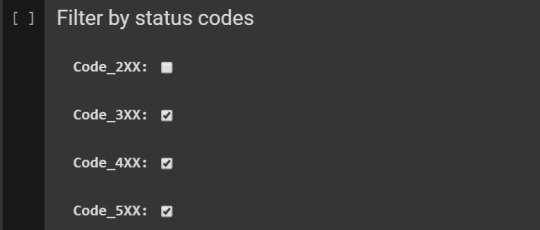

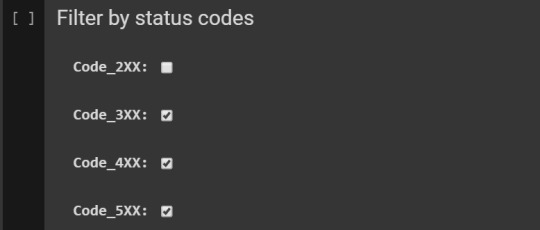

Then filter HTTP status codes via this form:

Related code:

Export to .csv

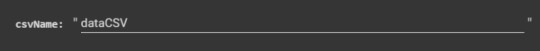

Our last step is to export our Dataframe to a .csv file. Give it a name via the export form:

Code for that last form:

Final words and shout-outs

Pat on the back if you’ve followed till here! You’ve achieved so much over the course of this article!

I cannot wait to take it to the next level in my next column, with more advanced data modeling/visualization techniques!

I’d like to thank the following people:

Tyler Reardon, who’s helped me to integrate his anti-spoofing tool into this notebook!

Paul Adams from Octamis and my dear compatriot Olivier Papon for their expert advice

Last but not least, Kudos to Hamlet Batista or JR Oakes – Thanks guys for being so inspirational to the SEO community!

Please reach me out on Twitter if questions, or if you need further assistance. Any feedback (including pull requests! :)) is also greatly appreciated!

Happy Pythoning!

This year’s SMX Advanced will feature a brand-new SEO for Developers track with highly-technical sessions – many in live-coding format – focused on using code libraries and architecture models to develop applications that improve SEO. SMX Advanced will be held June 8-10 in Seattle. Register today.

The post Leverage Python and Google Cloud to extract meaningful SEO insights from server log data appeared first on Search Engine Land.

Leverage Python and Google Cloud to extract meaningful SEO insights from server log data published first on https://likesandfollowersclub.weebly.com/

0 notes

Text

Leverage Python and Google Cloud to extract meaningful SEO insights from server log data

For my first post on Search Engine Land, I’ll start by quoting Ian Lurie:

Log file analysis is a lost art. But it can save your SEO butt!

Wise words.

However, getting the data we need out of server log files is usually laborious:

Gigantic log files require robust data ingestion pipelines, a reliable cloud storage infrastructure, and a solid querying system

Meticulous data modeling is also needed in order to convert cryptic, raw logs data into legible bits, suitable for exploratory data analysis and visualization

In the first post of this two-part series, I will show you how to easily scale your analyses to larger datasets, and extract meaningful SEO insights from your server logs.

All of that with just a pinch of Python and a hint of Google Cloud!

Here’s our detailed plan of action:

#1 – I’ll start by giving you a bit of context:

What are log files and why they matter for SEO

How to get hold of them

Why Python alone doesn’t always cut it when it comes to server log analysis

#2 – We’ll then set things up:

Create a Google Cloud Platform account

Create a Google Cloud Storage bucket to store our log files

Use the Command-Line to convert our files to a compliant format for querying

Transfer our files to Google Cloud Storage, manually and programmatically

#3 – Lastly, we’ll get into the nitty-gritty of Pythoning – we will:

Query our log files with Bigquery, inside Colab!

Build a data model that makes our raw logs more legible

Create categorical columns that will enhance our analyses further down the line

Filter and export our results to .csv

In part two of this series (available later this year), we’ll discuss more advanced data modeling techniques in Python to assess:

Bot crawl volume

Crawl budget waste

Duplicate URL crawling

I’ll also show you how to aggregate and join log data to Search Console data, and create interactive visualizations with Plotly Dash!

Excited? Let’s get cracking!

System requirements

We will use Google Colab in this article. No specific requirements or backward compatibility issues here, as Google Colab sits in the cloud.

Downloadable files

The Colab notebook can be accessed here

The log files can be downloaded on Github – 4 sample files of 20 MB each, spanning 4 days (1 day per file)

Be assured that the notebook has been tested with several million rows at lightning speed and without any hurdles!

Preamble: What are log files?

While I don’t want to babble too much about what log files are, why they can be invaluable for SEO, etc. (heck, there are many great articles on the topic already!), here’s a bit of context.

A server log file records every request made to your web server for content.

Every. Single. One.

In their rawest forms, logs are indecipherable, e.g. here are a few raw lines from an Apache webserver:

Daunting, isn’t it?

Raw logs must be “cleansed” in order to be analyzed; that’s where data modeling kicks in. But more on that later.

Whereas the structure of a log file mainly depends on the server (Apache, Nginx, IIS etc…), it has evergreen attributes:

Server IP

Date/Time (also called timestamp)

Method (GET or POST)

URI

HTTP status code

User-agent

Additional attributes can usually be included, such as:

Referrer: the URL that ‘linked’ the user to your site

Redirected URL, when a redirect occurs

Size of the file sent (in bytes)

Time taken: the time it takes for a request to be processed and its response to be sent

Why are log files important for SEO?

If you don’t know why they matter, read this. Time spent wisely!

Accessing your log files

If you’re not sure where to start, the best is to ask your (client’s) Web Developer/DevOps if they can grant you access to raw server logs via FTP, ideally without any filtering applied.

Here are the general guidelines to find and manage log data on the three most popular servers:

Apache log files (Linux)

NGINX log files (Linux)

IIS log files (Windows)

We’ll use raw Apache files in this project.

Why Pandas alone is not enough when it comes to log analysis

Pandas (an open-source data manipulation tool built with Python) is pretty ubiquitous in data science.

It’s a must to slice and dice tabular data structures, and the mammal works like a charm when the data fits in memory!

That is, a few gigabytes. But not terabytes.

Parallel computing aside (e.g. Dask, PySpark), a database is usually a better solution for big data tasks that do not fit in memory. With a database, we can work with datasets that consume terabytes of disk space. Everything can be queried (via SQL), accessed, and updated in a breeze!

In this post, we’ll query our raw log data programmatically in Python via Google BigQuery. It’s easy to use, affordable and lightning-fast – even on terabytes of data!

The Python/BigQuery combo also allows you to query files stored on Google Cloud Storage. Sweet!

If Google is a nay-nay for you and you wish to try alternatives, Amazon and Microsoft also offer cloud data warehouses. They integrate well with Python too:

Amazon:

AWS S3

Redshift

Microsoft:

Azure Storage

Azure data warehouse

Azure Synaps

Create a GCP account and set-up Cloud Storage

Both Google Cloud Storage and BigQuery are part of Google Cloud Platform (GCP), Google’s suite of cloud computing services.

GCP is not free, but you can try it for a year with $300 credits, with access to all products. Pretty cool.

Note that once the trial expires, Google Cloud Free Tier will still give you access to most Google Cloud resources, free of charge. With 5 GB of storage per month, it’s usually enough if you want to experiment with small datasets, work on proof of concepts, etc…

Believe me, there are many. Great. Things. To. Try!

You can sign-up for a free trial here.

Once you have completed sign-up, a new project will be automatically created with a random, and rather exotic, name – e.g. mine was “learned-spider-266010“!

Create our first bucket to store our log files

In Google Cloud Storage, files are stored in “buckets”. They will contain our log files.

To create your first bucket, go to storage > browser > create bucket:

The bucket name has to be unique. I’ve aptly named mine ‘seo_server_logs’!

We then need to choose where and how to store our log data:

#1 Location type – ‘Region’ is usually good enough.

#2 Location – As I’m based in the UK, I’ve selected ‘Europe-West2’. Select your nearest location

#3 Click on ‘continue’

Default storage class: I’ve had good results with ‘nearline‘. It is cheaper than standard, and the data is retrieved quickly enough:

Access to objects: “Uniform” is fine:

Finally, in the “advanced settings” block, select:

#1 – Google-managed key

#2 – No retention policy

#3 – No need to add a label for now

When you’re done, click “‘create.”

You’ve created your first bucket! Time to upload our log data.

Adding log files to your Cloud Storage bucket

You can upload as many files as you wish, whenever you want to!

The simplest way is to drag and drop your files to Cloud Storage’s Web UI, as shown below:

Yet, if you really wanted to get serious about log analysis, I’d strongly suggest automating the data ingestion process!

Here are a few things you can try:

Cron jobs can be set up between FTP servers and Cloud Storage infrastructures:

Gsutil, if on GCP

SFTP Transfers, if on AWS

FTP managers like Cyberduck also offer automatic transfers to storage systems, too

More data ingestion tips here (AppEngine, JSON API etc.)

A quick note on file formats

The sample files uploaded in Github have already been converted to .csv for you.

Bear in mind that you may have to convert your own log files to a compliant file format for SQL querying. Bigquery accepts .csv or .parquet.

Files can easily be bulk-converted to another format via the command line. You can access the command line as follows on Windows:

Open the Windows Start menu

Type “command” in the search bar

Select “Command Prompt” from the search results

I’ve not tried this on a Mac, but I believe the CLI is located in the Utilities folder

Once opened, navigate to the folder containing the files you want to convert via this command:

CD 'path/to/folder’

Simply replace path/to/folder with your path.

Then, type the command below to convert e.g. .log files to .csv:

for file in *.log; do mv "$file" "$(basename "$file" .*0).csv"; done

Note that you may need to enable Windows Subsystem for Linux to use this Bash command.

Now that our log files are in, and in the right format, it’s time to start Pythoning!

Unleash the Python

Do I still need to present Python?!

According to Stack Overflow, Python is now the fastest-growing major programming language. It’s also getting incredibly popular in the SEO sphere, thanks to Python preachers like Hamlet or JR.

You can run Python on your local computer via Jupyter notebook or an IDE, or even in the cloud via Google Colab. We’ll use Google Colab in this article.

Remember, the notebook is here, and the code snippets are pasted below, along with explanations.

Import libraries + GCP authentication

We’ll start by running the cell below:

It imports the Python libraries we need and redirects you to an authentication screen.

There you’ll have to choose the Google account linked to your GCP project.

Connect to Google Cloud Storage (GCS) and BigQuery

There’s quite a bit of info to add in order to connect our Python notebook to GCS & BigQuery. Besides, filling in that info manually can be tedious!

Fortunately, Google Colab’s forms make it easy to parameterize our code and save time.

The forms in this notebook have been pre-populated for you. No need to do anything, although I do suggest you amend the code to suit your needs.

Here’s how to create your own form: Go to Insert > add form field > then fill in the details below:

When you change an element in the form, its corresponding values will magically change in the code!

Fill in ‘project ID’ and ‘bucket location’

In our first form, you’ll need to add two variables:

Your GCP PROJECT_ID (mine is ‘learned-spider-266010′)

Your bucket location:

To find it, in GCP go to storage > browser > check location in table

Mine is ‘europe-west2′

Here’s the code snippet for that form:

Fill in ‘bucket name’ and ‘file/folder path’:

In the second form, we’ll need to fill in two more variables:

The bucket name:

To find it, in GCP go to: storage > browser > then check its ‘name’ in the table

I’ve aptly called it ‘apache_seo_logs’!

The file path:

You can use a wildcard to query several files – Very nice!

E.g. with the wildcarded path ‘Loggy*’, Bigquery would query these three files at once:

Loggy01.csv

Loggy02.csv

Loggy03.csv

Bigquery also creates a temporary table for that matter (more on that below)

Here’s the code for the form:

Connect Python to Google Cloud Storage and BigQuery

In the third form, you need to give a name to your BigQuery table – I’ve called mine ‘log_sample’. Note that this temporary table won’t be created in your Bigquery account.

Okay, so now things are getting really exciting, as we can start querying our dataset via SQL *without* leaving our notebook – How cool is that?!

As log data is still in its raw form, querying it is somehow limited. However, we can apply basic SQL filtering that will speed up Pandas operations later on.

I have created 2 SQL queries in this form:

“SQL_1st_Filter” to filter any text

“SQL_Useragent_Filter” to select your User-Agent, via a drop-down

Feel free to check the underlying code and tweak these two queries to your needs.

If your SQL trivia is a bit rusty, here’s a good refresher from Kaggle!

Code for that form:

Converting the list output to a Pandas Dataframe

The output generated by BigQuery is a two-dimensional list (also called ‘list of lists’). We’ll need to convert it to a Pandas Dataframe via this code:

Done! We now have a Dataframe that can be wrangled in Pandas!

Data cleansing time, the Pandas way!

Time to make these cryptic logs a bit more presentable by:

Splitting each element

Creating a column for each element

Split IP addresses

Split dates and times

We now need to convert the date column from string to a “Date time” object, via the Pandas to_datetime() method:

Doing so will allow us to perform time-series operations such as:

Slicing specific date ranges

Resampling time series for different time periods (e.g. from day to month)

Computing rolling statistics, such as a rolling average

The Pandas/Numpy combo is really powerful when it comes to time series manipulation, check out all you can do here!

More split operations below:

Split domains

Split methods (Get, Post etc…)

Split URLs

Split HTTP Protocols

Split status codes

Split ‘time taken’

Split referral URLs

Split User Agents

Split redirected URLs (when existing)

Reorder columns

Time to check our masterpiece:

Well done! With just a few lines of code, you converted a set of cryptic logs to a structured Dataframe, ready for exploratory data analysis.

Let’s add a few more extras.

Create categorical columns

These categorical columns will come handy for data analysis or visualization tasks. We’ll create two, paving the way for your own experiments!

Create an HTTP codes class column

Create a search engine bots category column

As you can see, our new columns httpCodeClass and SEBotClass have been created:

Spotting ‘spoofed’ search engine bots

We still need to tackle one crucial step for SEO: verify that IP addresses are genuinely from Googlebots.

All credit due to the great Tyler Reardon for this bit! Tyler has created searchtools.io, a clever tool that checks IP addresses and returns ‘fake’ Googlebot ones, based on a reverse DNS lookup.

We’ve simply integrated that script into the notebook – code snippet below:

Running the cell above will create a new column called ‘isRealGbot?:

Note that the script is still in its early days, so please consider the following caveats:

You may get errors when checking a huge amount of IP addresses. If so, just bypass the cell

Only Googlebots are checked currently

Tyler and I are working on the script to improve it, so keep an eye on Twitter for future enhancements!

Filter the Dataframe before final export

If you wish to further refine the table before exporting to .csv, here’s your chance to filter out status codes you don’t need and refine timescales.

Some common use cases:

You have 12 months’ worth of log data stored in the cloud, but only want to review the last 2 weeks

You’ve had a recent website migration and want to check all the redirects (301s, 302s, etc.) and their redirect locations

You want to check all 4XX response codes

Filter by date

Refine start and end dates via this form:

Filter by status codes

Check status codes distribution before filtering:

Code:

Then filter HTTP status codes via this form:

Related code:

Export to .csv

Our last step is to export our Dataframe to a .csv file. Give it a name via the export form:

Code for that last form:

Final words and shout-outs

Pat on the back if you’ve followed till here! You’ve achieved so much over the course of this article!

I cannot wait to take it to the next level in my next column, with more advanced data modeling/visualization techniques!

I’d like to thank the following people:

Tyler Reardon, who’s helped me to integrate his anti-spoofing tool into this notebook!

Paul Adams from Octamis and my dear compatriot Olivier Papon for their expert advice

Last but not least, Kudos to Hamlet Batista or JR Oakes – Thanks guys for being so inspirational to the SEO community!

Please reach me out on Twitter if questions, or if you need further assistance. Any feedback (including pull requests! :)) is also greatly appreciated!

Happy Pythoning!

This year’s SMX Advanced will feature a brand-new SEO for Developers track with highly-technical sessions – many in live-coding format – focused on using code libraries and architecture models to develop applications that improve SEO. SMX Advanced will be held June 8-10 in Seattle. Register today.

The post Leverage Python and Google Cloud to extract meaningful SEO insights from server log data appeared first on Search Engine Land.

Leverage Python and Google Cloud to extract meaningful SEO insights from server log data published first on https://likesfollowersclub.tumblr.com/

0 notes

Text

Customer Convos: The Google Cloud Team

This piece is a part of our Customer Convos series. We’re sharing stories of how people use npm at work. Want to share your thoughts? Drop us a line.

Q: Hi! Can you state your name and what you do, and what your company does?

Luke Sneeringer, SWE: Our company is Google.

How about this: what specifically are you doing? What does your team do?

LS: I am responsible for the authorship and maintenance of cloud client libraries in Python in Node.js.

Justin Beckwith, Product Manager: Essentially, Google has over 100 APIs and services that we provide to developers, and for each of those APIs and services we have a set of libraries we use to access them. The folks on this team help build the client libraries. Some libraries are automatically generated while others are hand-crafted, but for each API service that Google has, we want to have a corresponding npm module that makes it easy and delightful for Node.js users to use.

How’s your day going?

JB: My day’s going awesome! We’re at a Node conference, the best time of the year. You get to see all your friends and hang out with people that you only get to see here at Node.js Interactive and at Node Summit.

Today, we announced the public beta of Firestore, and of course we published an npm package. Cloud Firestore is a fully-managed NoSQL database, designed to easily store and sync app data at global scale.

Tell me the story of npm at your company. What specific problem did you have that private packages and Orgs solved?

JB: Google is a large company with a lot of products that span a lot of different spaces, but we want to have a single, consistent way for all of our developers to be able to publish their packages. More importantly, we need to have some sort of organizational mesh for the maintenance of those packages. For instance, if Ali publishes a package one day, and then tomorrow he leaves Google, we need to make sure we have the appropriate organization in place so that multiple people have the right access.

Ali Shiekh, SWE: We use npm Organization features to manage our modules and have teams set up to manage each of the distinct libraries that we have.

JB: We’re also users of some of the metrics that y’all produce. We use the number of daily installs for each module to measure adoption of our libraries and to figure out how they’re performing, not only against other npm modules but also other languages we support on the platform.

How do you consume that? Just logging into the website?

JB: No, we do a HTTP call, grab the response, and put it into BigQuery. Then we do analytics over that data in BigQuery and have it visualized on our dashboards.

How can private packages and orgs help you out?

JB: At Google, any time we release a product, there are four release phases that we go through, and the first is what we call an EAP, which is an “Early Access Preview” for a few hundred customers. When we’re distributing those EAPs, it can be difficult to get packages in the hands of customers. We don’t want to disclose a product that’s coming out, because we haven’t announced it yet, but we still need validation and feedback from people that we’ve built the right API and we have the right thing. Moving forward, that’s the way we’d like to use private packages.

What’s an improvement on our side that you would like to see? Or something you would like to see in the future?

LS: Something that we would be interested in seeing npm develop is the ability to have certain versions of a public package be private. Let’s say that we have 1.0, and there’s a 2.0 being developed in private that’s being EAP’ed.… I don’t think you have the concept yet of a private version of a public package.

Along with that, better management of package privacy. Managing an EAP for five people is very different than managing an EAP for 300 people. Another thing that would be nice would be the ability to give another npm Org access to a module at once.